Enterprise Product Design & Research

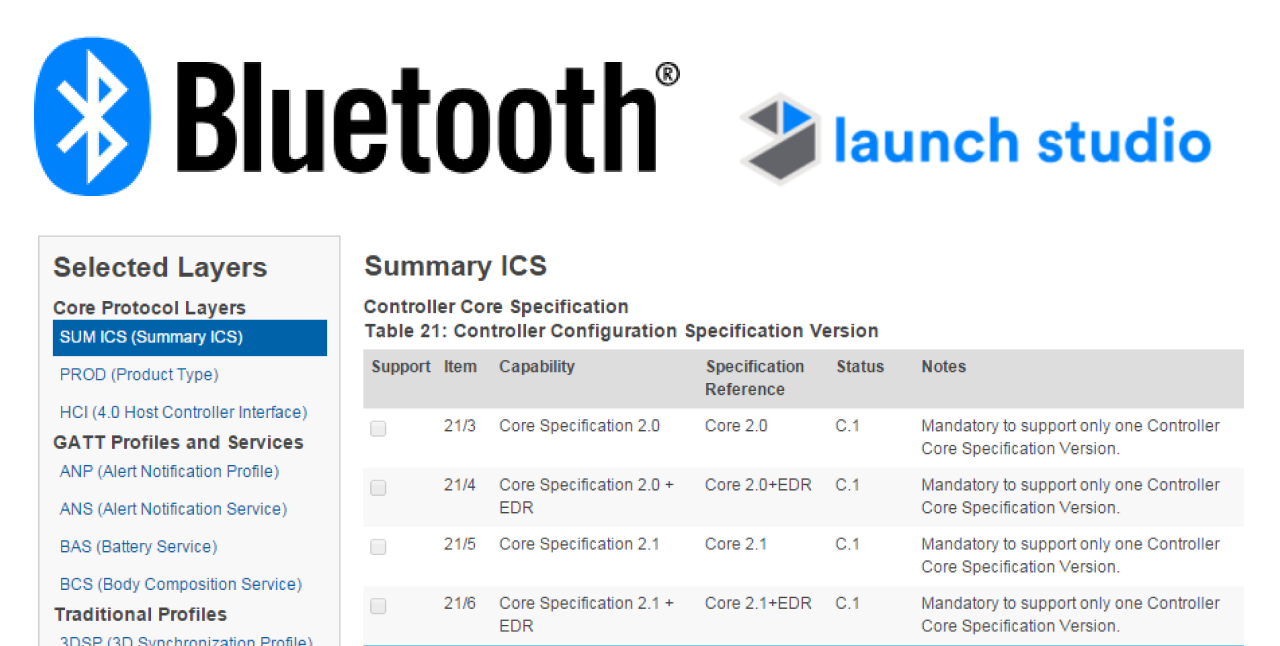

Bluetooth Launch Studio

- Timeframe: 22 Months (2014-2016)

- Personal role: Lead UX researcher and designer

Bluetooth is a wireless technology found in billions of devices. The Bluetooth Special Interest Group (SIG) creates tools to help companies design, test, and bring products to market. The SIG also manages the Bluetooth trademark and coordinates development of technical specifications with the input of its 35,000 members.

Each new Bluetooth product must be tested to ensure compatibility and compliance with a technical specification. Launch Studio is a web app that helps engineers determine testing requirements, document test results, and make informed decisions about their product's design and features.

Working with product managers and engineers, I led the two-year process of creating Launch Studio. As the lead UX designer and researcher with additional program manager responsibilities, I drove the product vision from inception through beta releases, navigating complex technical policy and divergent user needs within an engineering-driven culture.

Launch Studio replaced a fragmented, siloed set of tools that generated $15 million annually, 75% of the SIG's revenue. Over the preceding decade, layers of complexity were added as policy changed. The process became so complex and opaque that a cottage industry of paid consultants grew up to support these tools. Novice users were so intimidated that, when testing was required, 97% chose to hire these consultants rather than learn the tools themselves.

As the lead UX designer and researcher, I collaborated with an Agile engineering team, Program Managers, a UX Engineer, and a UX Manager. For much of the project, I also managed stakeholders and communicated plans both internally and with members.

The Bluetooth SIG is a member-driven organization with advisory boards for various technical roles. I worked with BTI, a board of experts that defines testing procedures and policies. It is an independent group of expert users, but one with political power and insider status. I also worked with the BQEs, the independent consultants who guide clients through the testing process.

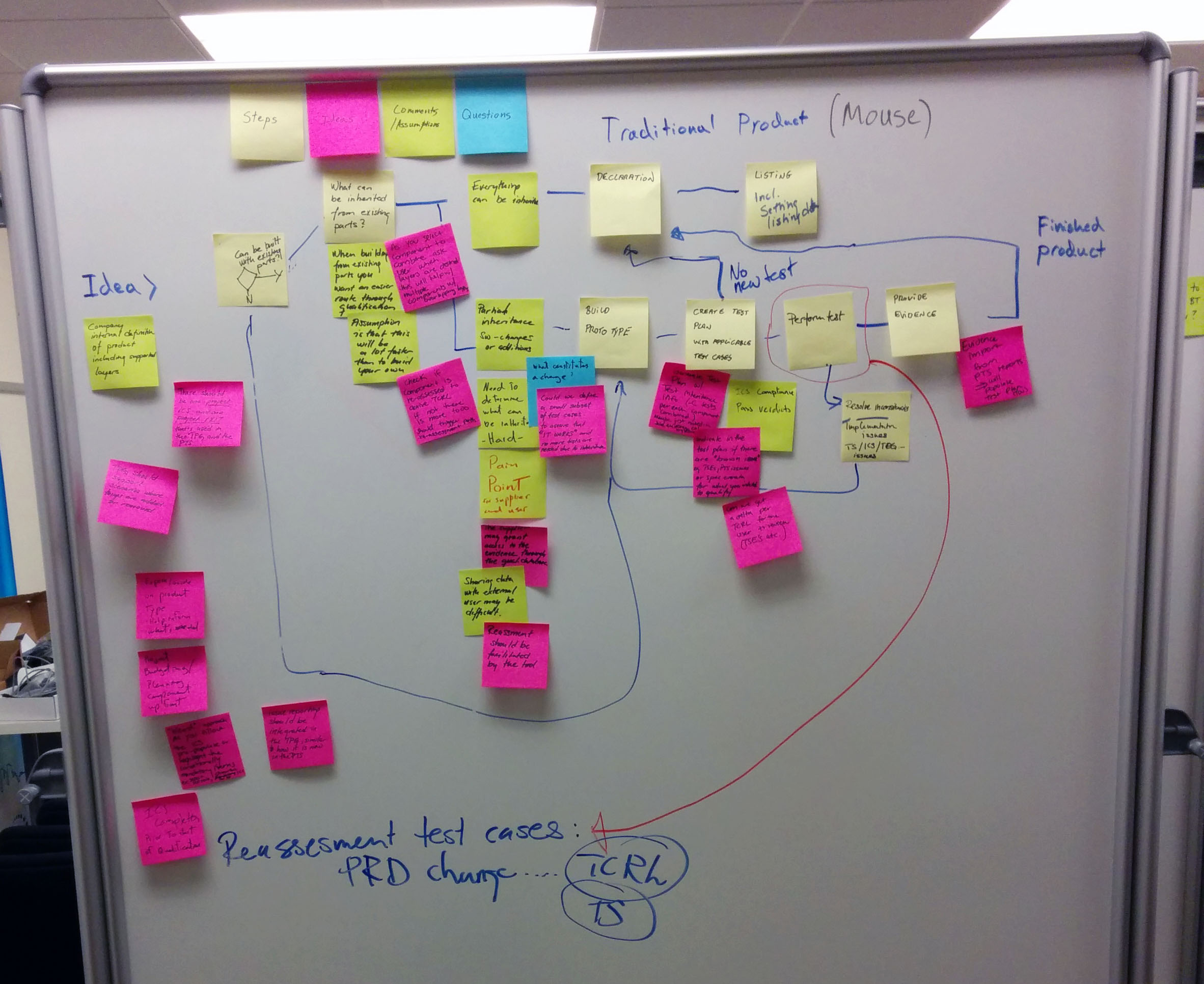

In a three-day workshop, we explored the tools, pain points, and scope in terms of policy and regulation impediments. While the group had prepared multiple specific feature and UI suggestions, I kept them focused on the overall process. By stepping back from the user interface and examining the underlying process, we were able to discover the root causes of difficulties and better explore how the tools could work.

The research workshop and subsequent interviews revealed a handful of critical insights:

The research workshop exposed distinct user groups. The business began this project expecting to combine two main tools: TPG, a web app for determining test requirements, and PTS, an offline tool for conducting the testing. We discovered not only that the existing approach was working well, but also that the people using PTS held an entirely different role from those who used the online tools to make a test plan. The existing division of tools, though archaic, aligned well with the different user groups and their needs.

This group of experts asked for more features rather than for making the existing features work well. Individual interviews revealed an ulterior motive: an easy-to-use tool undermines the consultants' business. They wanted the SIG to provide more expert-oriented features, but not to remove the barriers to entry for novices. Challenging the expert-centric culture of the existing tools - both internally and externally - became a cornerstone of the product.

Later, I spoke to a different user group: professionals who conduct the testing. Unlike the managers and policy makers from the earlier workshop, these testers knew the technology and terminology, but infrequently worked with the existing online tools. More commonly, they consumed test plans from the online tools and submitted their results to test managers.

A third group takes existing Bluetooth designs and sells them under their own brand. This is common for Bluetooth speakers and mice, where one electronic design can be pretested and integrated with different physical forms. This group doesn't need to conduct testing; it only needs to complete a short form and pay a fee. Rebranders often do not understand the requirements, and spend extra time and money unnecessarily requalifying their designs.

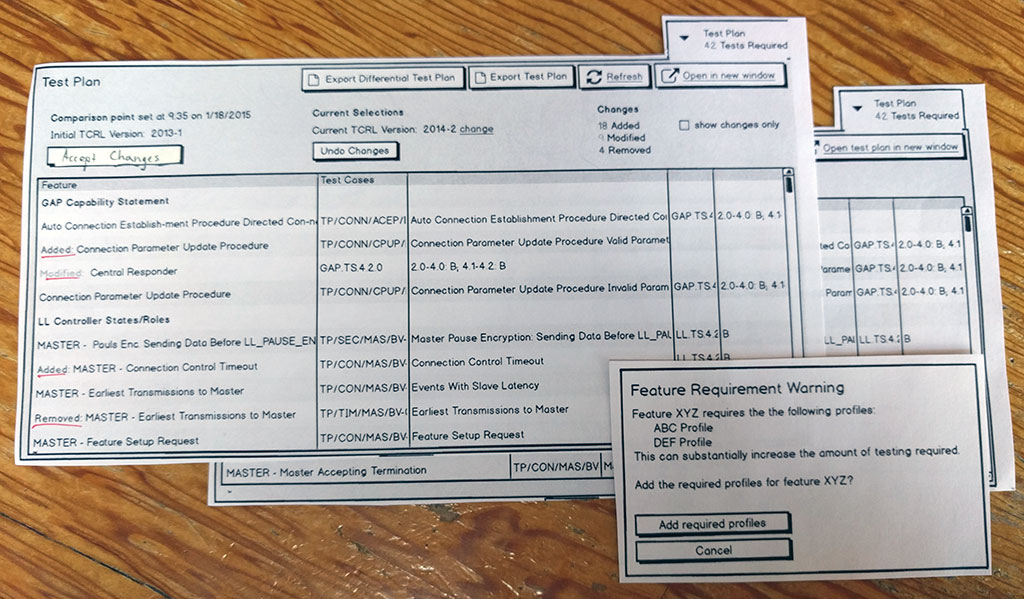

I conducted interviews by first asking about the existing process and tools, then testing a paper prototype. These conversations revealed new issues surrounding collaboration and tasks manually conducted outside of our tools.

Test plans are created by test managers using one of the online tools. The test plans are then used by test engineers, who run the tests on multiple platforms. Test engineers then document the test results and evidence, which are uploaded back to the online tools. Documentation is completed manually, usually in an Excel spreadsheet. This process is tedious and prone to error. With thousands of tests and testers, plus multiple versions of the hardware and software under test, test managers never get a clear picture of their progress. Speaking with people who manage evidence but don't run their own tests, I was able to better understand the process and solve problems that would not have been identified with a narrow, UI-centric approach.

Research exposed a process with a complex set of testing requirements that could shift in scope depending upon seemingly minor details. People didn't know how to get started or how much work would be required. They needed a clear starting point to expose the required steps, adding certainty to the process and scope of the testing requirements.

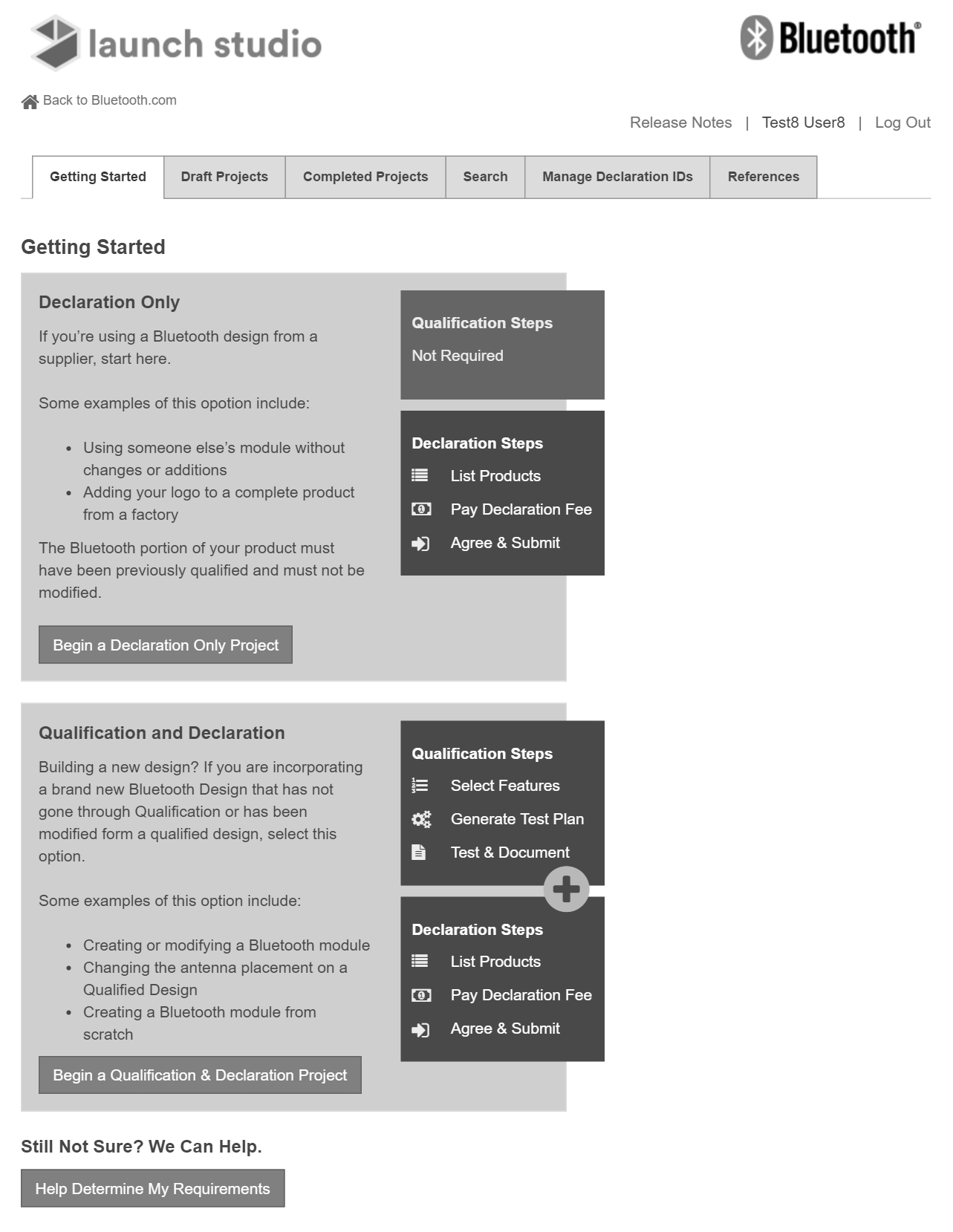

The underlying process that Launch Studio supports can be complex, but in most cases it can be reduced to a simple product declaration form. The challenge is in directing users down the correct path: the simple path whenever it is allowed, and the full, complex process only when it is required.

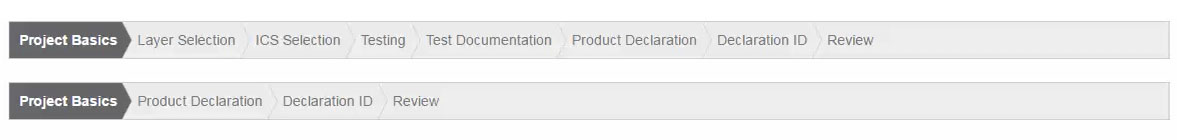

Launch Studio supports two main processes - Qualification and Declaration. For simple cases where the hardware changes are minimal, policy requires only a simple declaration. For scenarios where the Bluetooth hardware design has changed, qualification testing is required before declaration. Launch Studio uses two distinct paths to make the simple cases effortless, but without marginalizing the complex scenarios.

The core issue is determining whether a user needs to conduct qualification testing. The qualification process takes months and costs thousands of dollars, so it’s important to avoid it if not required. Unfortunately, the requirements are hundreds of pages long and still don’t provide a clear answer.

The first approach to this problem asked two simple questions at the beginning of every project, which alter the resulting steps based on the requirements.

When the design was tested, the experts resented having to answer questions when they already knew which process they needed. Furthermore, the questions oversimplified the process and didn’t capture every nuance of the requirements.

Because the tool had to be accessible to everyone but could not hide the technical requirements, the Getting Started page was created. This page orients novices by explaining that there are two processes, then provides simple examples of each scenario. Users consciously choose the correct path based upon their requirements. For most cases, this provides a clear answer.

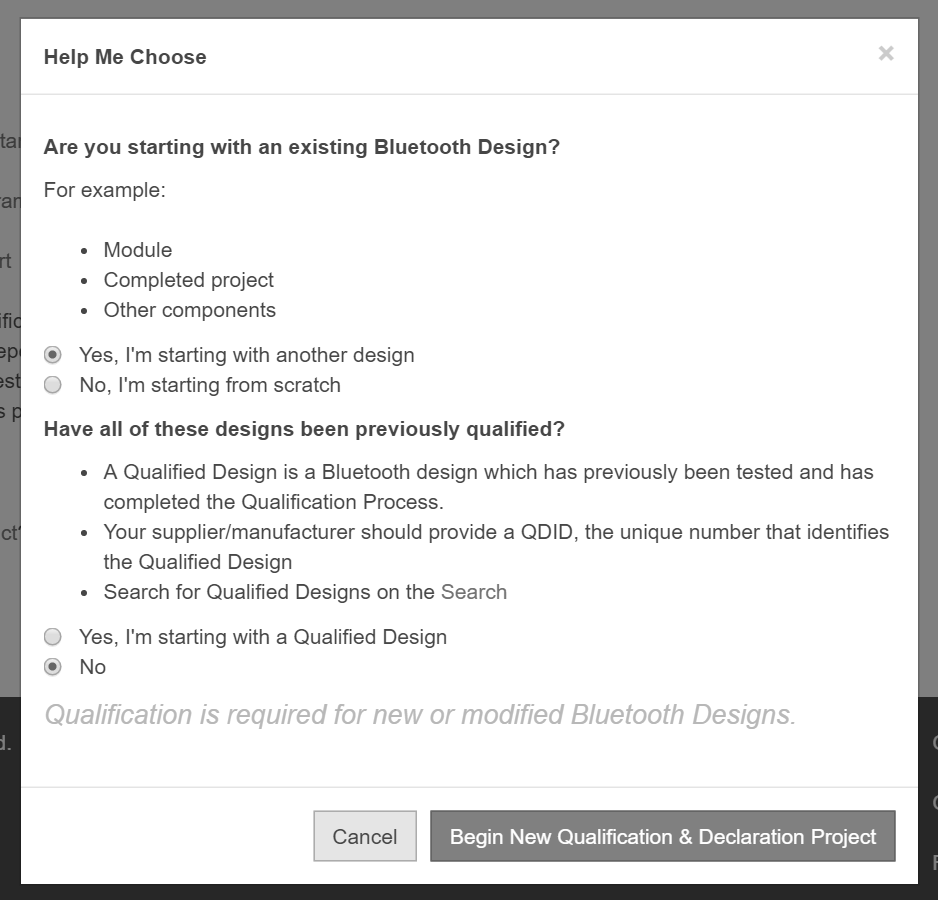

For those who still don’t know which path to take, a second method is offered. The “Help me decide” button opens a modal box with yes-or-no questions. Through progressive disclosure, up to three questions are asked and a verdict is rendered.

This was a delicate compromise between distinct user groups. Experts insisted on power and direct control of all aspects. The other 90% of people needed help understanding the requirements. The help wizard offers direction and explanation of requirements while not hindering experts.
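The "Help me decide" flow can be sketched as a small decision tree that asks one yes-or-no question at a time and stops as soon as a verdict is reached. This is only an illustrative sketch: the question wording, the `Question` class, and the `help_me_decide` function are hypothetical and are not taken from Launch Studio or from Bluetooth SIG policy. Only the two outcomes (Qualification and Declaration) and the three-question limit come from the description above.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union

QUALIFICATION = "Qualification"
DECLARATION = "Declaration"


@dataclass(frozen=True)
class Question:
    """One yes/no step; each branch is another question or a verdict."""
    text: str
    if_yes: Union["Question", str]
    if_no: Union["Question", str]


# Hypothetical questions, invented for illustration only.
WIZARD = Question(
    text="Are you selling an existing, already-tested design under your own brand?",
    if_yes=DECLARATION,
    if_no=Question(
        text="Has the Bluetooth hardware design changed?",
        if_yes=QUALIFICATION,
        if_no=Question(
            text="Does the product add Bluetooth features that were not tested before?",
            if_yes=QUALIFICATION,
            if_no=DECLARATION,
        ),
    ),
)


def help_me_decide(answers: List[bool]) -> Tuple[Optional[str], List[str]]:
    """Walk the tree with the answers given so far.

    Returns (verdict, questions_asked). The verdict is None when the
    answers run out before a leaf is reached, i.e. the next question
    still has to be shown.
    """
    node: Union[Question, str] = WIZARD
    asked: List[str] = []
    for answer in answers:
        if isinstance(node, str):
            break  # verdict already reached; ignore extra answers
        asked.append(node.text)
        node = node.if_yes if answer else node.if_no
    verdict = node if isinstance(node, str) else None
    return verdict, asked


verdict, asked = help_me_decide([False, True])
print(verdict, len(asked))
```

Because each question is only revealed after the previous answer, a user on the simplest path answers a single question, while no path asks more than three.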

If the correct path remains unclear, links to customer service, in-depth guides, and even the policy documents are provided. This gives everyone a simple way to get a resolution without hiding the necessary complexity.

An interactive HTML/CSS/JS prototype was developed early in the design process. This helped the UX team establish a pattern library and style guide. The prototype was used extensively for user testing and internal decision making. The style guide helped the engineering team understand low-fidelity Balsamiq prototypes, saving design team resources. After a private beta, Launch Studio was released in 2017 and the prototype was taken offline.

A System Usability Scale (SUS) survey was conducted both before and after product launch. Note: “after” data includes the contributions of the team after my departure from the project.

Overall, Launch Studio saw a 15.6-point improvement over its predecessor (43.6 to 59.2). The percentage of members either somewhat or strongly agreeing with the survey statement "I thought Launch Studio was easy to use" went up from 24% to 57%.
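For readers unfamiliar with how SUS scores like these are derived, the standard scoring can be sketched as follows. A SUS questionnaire has ten statements rated from 1 (strongly disagree) to 5 (strongly agree); odd-numbered statements are positively worded and even-numbered ones negatively worded. The function names and sample responses below are illustrative assumptions, not the actual survey data or tooling used on this project.

```python
from typing import List, Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one respondent's ten SUS answers (each 1-5) on a 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        if item % 2 == 1:
            total += rating - 1   # odd items: positively worded
        else:
            total += 5 - rating   # even items: negatively worded
    return total * 2.5            # scale the 0-40 sum to 0-100


def mean_sus(all_responses: List[Sequence[int]]) -> float:
    """Average the per-respondent scores to get the survey-level score."""
    return sum(sus_score(r) for r in all_responses) / len(all_responses)


# Made-up respondents, for illustration only.
sample = [
    [4, 2, 4, 2, 3, 3, 4, 2, 3, 3],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
print(mean_sus(sample))
```

The reported scores are averages of per-respondent scores computed this way, so the change from 43.6 to 59.2 is a difference on the 0-100 SUS scale, not a percentage.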

The qualitative sentiment was much improved, with some notable rough spots. Novices were more satisfied than established experts. One member noted that “It is much better than the old system, but some tasks are a little difficult to find (maybe because I'm used to the old system).”

The negative comments received generally fell into one of four categories: